Impact Factor: 1.0

5-Year Impact Factor: 1.1

CiteScore: 1.8

Chuanlong Wang and Rongrong Xue

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0005 (Publication Date: 2025-05-21)

Wei Wang, Chengyun Yang and Qifan Song

J. Comp. Math., doi:10.4208/jcm.2503-m2024-0010 (Publication Date: 2025-05-30)

Jyoti, Seokjun Ham, Soobin Kwak, Youngjin Hwang, Seungyoon Kang and Junseok Kim

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0106 (Publication Date: 2025-06-06)

Khadijeh Sadri, David Amilo and Evren Hincal

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0211 (Publication Date: 2025-06-06)

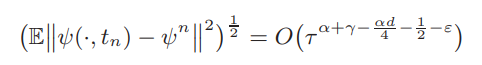

Yujie Li and Chuanju Xu

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0235 (Publication Date: 2025-06-18)

Hao Wu and Jiaqing Yang

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0272 (Publication Date: 2025-06-18)

Yangyi Ye, Lin Li, Pengcheng Xie and Haijun Yu

J. Comp. Math., doi:10.4208/jcm.2505-m2024-0276 (Publication Date: 2025-06-24)

Zhen Song, Minghua Chen and Jiankang Shi

J. Comp. Math., doi:10.4208/jcm.2505-m2025-0062 (Publication Date: 2025-09-01)

Waixiang Cao, Zhimin Zhang and Qingsong Zou

J. Comp. Math., doi:10.4208/jcm.2504-m2024-0201 (Publication Date: 2025-09-05)

A.J.A. Ramos, L.G.M. Rosário, B. Feng, M.M. Freitas and L.S. Veras

J. Comp. Math., doi:10.4208/jcm.2505-m2024-0233 (Publication Date: 2025-09-05)

Qiang Han, Shihao Lan and Quanxin Zhu

J. Comp. Math., doi:10.4208/jcm.2505-m2024-0206 (Publication Date: 2025-09-15)

Chaobao Huang, Yujie Yu, Na An and Hu Chen

J. Comp. Math., doi:10.4208/jcm.2505-m2024-0260 (Publication Date: 2025-09-22)

Gang Wu, Ke Li and Jianjian Wang

J. Comp. Math., doi:10.4208/jcm.2506-m2024-0192 (Publication Date: 2025-09-25)

Wenshun Teng and Qingna Li

J. Comp. Math., doi:10.4208/jcm.2506-m2024-0128 (Publication Date: 2025-09-26)